Citation analysis rests on four implicit dimensions: productivity, visibility, reputation, and impact. Each has been discussed, directly or indirectly, in the scholarly communication and citation analysis literature, but not explicitly as faculty evaluation criteria. This is primarily because the application of webometrics (discussed in the previous post) departs from the control and domain of academic publishing companies as sources of reputational metrics. The following is a brief discussion of each.

Productivity

Academic “productivity” typically refers only to research and refereed publication activities, not to teaching or outreach. As a simple quantitative measure (i.e., a numeric count) of artifacts, including books, chapters, articles, presentations, grants, etc., productivity is the traditional method of evaluating academic output (Leahey 2007; Adkins and Budd 2006). There are few reliable metrics for productive teaching or outreach other than counts of activities, such as student credit hours, contact hours, or internal and external committee or board memberships (Massy 2010). Productivity is easily derived from a CV by counting each of the artifacts or activities listed. Some CVs also report citation counts for journal articles (and, more recently, for books and book chapters) and journal impact factors (JIF) to convey the weight, importance, or recognition of the work. However, these metrics apply only to published materials that are indexed by citation databases. While traditionally important, these products account for only a portion of what is commonly expected of tenure-track faculty, missing the rest of the academic footprint: dissertations, book reviews, conference presentations (and proceedings), research reports, grant activity, and teaching activities (Youn and Price 2009). There are subjective ways to evaluate the quality and importance of these works, but none comparable to bibliometrics. Counting these products is a very limited way to assess academic output.
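Because productivity in this sense is just a tally, it can be sketched in a few lines. The minimal Python sketch below assumes that a CV has already been parsed into (artifact type, title) pairs. The entries, the type labels, and the `productivity_counts` helper are all hypothetical.

```python
from collections import Counter

# Hypothetical CV entries, already parsed into (artifact_type, title) pairs.
cv_entries = [
    ("article", "Article A"),
    ("article", "Article B"),
    ("chapter", "Chapter C"),
    ("presentation", "Talk D"),
    ("grant", "Grant E"),
    ("presentation", "Talk F"),
]

def productivity_counts(entries):
    """Tally CV artifacts by type: productivity as a raw count."""
    return Counter(kind for kind, _title in entries)

counts = productivity_counts(cv_entries)
print(dict(counts))                              # per-type counts
print("total artifacts:", sum(counts.values()))  # 6
```

The tally says nothing about quality or about activities missing from the CV, which is exactly the limitation described above.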

The meaning of “productivity” is also discipline-specific: expectations for research activity, scholarly publication, and other creative works vary across fields (Dewett and Denisi 2004). Some disciplines have devised weighting systems that specify how particular activities or outputs count toward promotion and tenure or merit-pay evaluation (see, for example, Davis and Rose 2011; Mezrich and Nagy 2007). Although the topic is controversial, academic activities and productivity have funding implications for public universities in the eyes of state legislatures and the public, especially during challenging economic times (see Musick 2011; Townsend and Rosser 2007; Webber 2011; O’Donnell 2011). As public universities become increasingly self-reliant for funding, they may need to adopt more of a private business model for accountability (Adler and Harzing 2009). Pressure to dissolve tenure systems that protect “unproductive” faculty members should be confronted constructively and creatively rather than dismissed out of hand.
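A weighting system of this kind can be sketched as a weighted sum over artifact counts. The two weight tables below are hypothetical placeholders for unnamed disciplines. They are not the values used by Davis and Rose (2011), Mezrich and Nagy (2007), or any institution. `weighted_productivity` is likewise an illustrative helper.

```python
# Hypothetical weight tables for two unnamed disciplines. Real schemes
# (e.g., Davis and Rose 2011; Mezrich and Nagy 2007) define their own
# categories and values; these numbers are placeholders only.
WEIGHTS = {
    "discipline_a": {"article": 3.0, "book": 5.0, "chapter": 2.0,
                     "presentation": 1.0, "grant": 2.5},
    "discipline_b": {"article": 2.0, "book": 8.0, "chapter": 3.0,
                     "presentation": 0.5, "grant": 4.0},
}

def weighted_productivity(counts, weights, default=0.0):
    """Sum count * weight over artifact types; unlisted types get `default`."""
    return sum(n * weights.get(kind, default) for kind, n in counts.items())

# Artifact counts of the kind produced by tallying a CV (hypothetical).
counts = {"article": 2, "chapter": 1, "presentation": 2, "grant": 1}

score_a = weighted_productivity(counts, WEIGHTS["discipline_a"])
score_b = weighted_productivity(counts, WEIGHTS["discipline_b"])
print(score_a, score_b)  # prints: 12.5 12.0
```

The same record scores differently under each table. This is the sense in which productivity is discipline-specific.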

Visibility

Traditionally, academic visibility was assumed to be a function of productivity. Leahey (2007) argues that visibility, like productivity, is a form of social capital: the more prolific an academic is, the more likely other academics are to be aware of them, which leads to further opportunities for professional gain. Before the internet, visibility was reflected in the number of books sold, in the impact of the journal or publication where an article appeared, or in well-attended conference presentations (depending on the prestige and popularity of the conference). Visibility could also include newspaper, radio, or even television references, though these are uncommon for the typical academic. The web, by contrast, provides visibility that reaches far beyond traditional academic borders. And because the web is an electronic archive, web visibility can be measured through searches that count the number of web mentions, web pages, or web links to an academic product. Academics who strategically publish their work on the internet (personal pages, blogs, institutional repositories, etc.) will have greater visibility (Beel, Gipp, and Wilde 2010). Self-promotion can benefit an academic's discipline, institution, and academic unit. However, visibility is distinct from productivity and reputation because it provides little indication of the quality of the work.
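As a rough illustration of measuring web visibility, the sketch below assumes a small set of already-crawled pages with hypothetical URLs on example domains. It counts how many pages mention a product's title and how many link to the product's URL. In practice these counts would come from search-engine queries or a crawler. The `Page` class, the `visibility` function, and the report title are assumptions for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Page:
    """A hypothetical crawled web page: its URL, visible text, and outgoing links."""
    url: str
    text: str
    links: list[str] = field(default_factory=list)

def visibility(pages, title, product_url):
    """Count pages mentioning `title` (case-insensitive) and pages linking to `product_url`."""
    needle = title.lower()
    mentions = sum(1 for p in pages if needle in p.text.lower())
    linking_pages = sum(1 for p in pages if product_url in p.links)
    return {"mentions": mentions, "linking_pages": linking_pages}

PRODUCT_URL = "http://repository.example.edu/planning-report.pdf"
pages = [
    Page("http://blog.example.org/review",
         "A review of the Regional Growth Report", [PRODUCT_URL]),
    Page("http://course.example.edu/syllabus",
         "Week 3 reading: Regional Growth Report"),
    Page("http://news.example.com/story",
         "City council approves budget", [PRODUCT_URL]),
]

result = visibility(pages, "Regional Growth Report", PRODUCT_URL)
print(result)
```

Both counts measure reach only. A page that links to or mentions the report says nothing about its quality.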

Leahey (2007) finds that productivity is positively correlated with visibility and that visibility, in turn, has a positive effect on faculty members' compensation. Academic visibility has other benefits as well, including attracting good students, internal and external financial support for research, and departmental growth through increased enrollments and additional departmental resources (Baird 1986). The link between productivity and visibility is also expressed through, and motivated by, the promotion and tenure process, which secures lifetime employment and other benefits. But once tenure is granted, the challenge for some departments is to keep faculty productive and creative in promoting their work for the benefit of their academic units.

Reputation

Web 2.0, or the social web, provides the means for generating reputation metrics through online user behavior (Priem and Hemminger 2010). This includes social bookmarking (Taraborelli 2008), social collection management (Neylon and Wu 2009), social recommendations (Heck and Peters 2010), publisher-hosted comment spaces (Adie 2009), microblogging (Priem and Costello 2010), user-edited references (Adie 2009), blogs (Hsu and Lin 2008), social networks (Roman 2011), data repositories (Knowlton 2011), and social video (Anderson 2009). All of these modes rely on users to view, tag, comment on, download, share, or store academic output on the web, so that usage metrics can be tracked. This requires meaningful interaction with the content, yet users have no clear incentive to provide it (Cheverie, Boettcher, and Buschman 2009).
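Tracking such usage can be sketched as a per-product tally of user actions. The event log and product IDs below are illustrative assumptions. So is the split into "engaged" actions (tag, comment, share, store) and passive ones (view, download). None of this is a standard altmetrics schema.

```python
from collections import Counter, defaultdict

# Hypothetical event log of (product_id, action) pairs.
events = [
    ("paper-1", "view"), ("paper-1", "view"), ("paper-1", "download"),
    ("paper-1", "tag"), ("paper-1", "store"),
    ("report-2", "view"), ("report-2", "share"), ("report-2", "comment"),
]

# Assumed split: actions treated here as meaningful interaction with the content.
ENGAGED_ACTIONS = {"tag", "comment", "share", "store"}

def usage_profile(log):
    """Count each action type per product."""
    profile = defaultdict(Counter)
    for product, action in log:
        profile[product][action] += 1
    return profile

def engaged_count(counter):
    """Sum only the actions listed in ENGAGED_ACTIONS."""
    return sum(n for action, n in counter.items() if action in ENGAGED_ACTIONS)

profile = usage_profile(events)
for product, counter in profile.items():
    print(product, dict(counter), "engaged:", engaged_count(counter))
```

Separating engaged actions from passive ones reflects the point above: these metrics depend on users choosing to interact with the content.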

Benefits include potentially faster feedback and a broader assessment of impact on audiences (Priem and Hemminger 2010). However, these “audiences” may not carry any particular validity or authority. In webometric analysis, reputation, recognition, and prestige are related: they refer to the number or rank of sites that mention an academic's work. While the total number of links or mentions indicates the visibility or accessibility of scholarly artifacts, recognition by esteemed researchers and institutions is a measure of value in the academic information market. The same concept is used in citation analysis, where citations carry more weight when the authors of the citing articles have themselves been cited often. Likewise, in the traditional tenure review process, positive external reference letters carry more weight when they come from well-known and respected individuals in the discipline. On the web, popularity and respect are manifested in the attention a work gains through backlinks or traffic. It can be argued that the attention a person gains does not reflect the quality of their work within their discipline; however, for lack of other metrics, positive citations and positive book reviews have been relied upon for years.
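The idea that a citation or link counts for more when it comes from a well-cited source can be illustrated with PageRank. PageRank is a recursive link-weighting algorithm, used here only as one familiar example. It is not presented as the method of this post. In the hypothetical graph below, `paperA` and `paperB` each receive two links. However, one of `paperA`'s links comes from a page that three other pages link to.

```python
def pagerank(graph, damping=0.85, iterations=50):
    """Power-iteration PageRank. `graph` maps each node to the nodes it links to (or cites)."""
    nodes = set(graph)
    for targets in graph.values():
        nodes.update(targets)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        # Every node receives the same baseline "teleport" share.
        new_rank = {v: (1.0 - damping) / n for v in nodes}
        for v in nodes:
            targets = graph.get(v, [])
            if targets:
                # A node passes its current rank to the nodes it links to, split evenly.
                share = damping * rank[v] / len(targets)
                for w in targets:
                    new_rank[w] += share
            else:
                # Dangling node (no outlinks): spread its rank over all nodes.
                share = damping * rank[v] / n
                for w in nodes:
                    new_rank[w] += share
        rank = new_rank
    return rank

# Hypothetical link graph: hub1 is itself linked from three other pages.
links = {
    "hub1": ["paperA"],
    "hub2": ["paperA"],
    "hub3": ["hub1"],
    "hub4": ["hub1"],
    "hub5": ["hub1"],
    "minor1": ["paperB"],
    "minor2": ["paperB"],
}

scores = pagerank(links)
for node in ("paperA", "paperB"):
    print(node, round(scores[node], 4))
```

Raw link counts rate the two papers equally. The recursive weighting ranks `paperA` higher because one of its referrers is itself well referenced.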

Impact

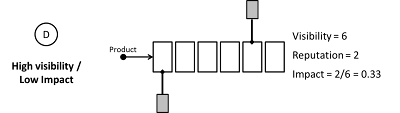

Along with productivity, visibility, and reputation, impact is the fourth dimension. The impact measure takes into account reputation per academic product or artifact. In other words, “impact” expresses the amount of attention generated by an article, chapter, report, presentation, etc. across an academic's career. One could assume that impact is always higher for senior faculty because their work has been in circulation longer than that of younger faculty. But time likely has a bigger effect on visibility than on reputation. High visibility (i.e., wide availability) can influence reputation, but differently from positive reviews by respected colleagues. On the web, reputation is gained when others express interest in an academic product by referring (or linking) to it.

Kousha, Thelwall, and Rezaie (2010) distinguish between formal and informal online impact. Formal impact is measured by sources such as Google Scholar (GS) citations. Informal impact is associated with gray sources such as online course syllabi, scholarly presentations (conference or seminar presentations), and blogs. They also conclude that informal online impact is significant and increasing in several disciplines. Another approach, “altmetrics” (see altmetrics.org), is “the creation and study of new metrics based on the social web for analyzing, and informing scholarship” (Priem, Taraborelli, Groth, and Neylon 2010, 1). This approach assesses scholarly impact through measures of usage (downloads and views), peer review (expert opinion), citations, and alt-metrics (storage, links, bookmarks, conversations). Kousha et al. (2010), Priem et al. (2010), and Bollen, Rodriguez, and Van de Sompel (2007) make strong cases for usage-based metrics. However, they do not address the full range of academic outputs suggested in this article.

Suppose an academic product (e.g., a journal article) is mentioned on three web pages. In Case A, only one of these pages has backlinks (links from other web pages). In Case B, the same product, with the same visibility, has nine backlinks. Although the product has the same visibility in both cases, Case B expresses a higher level of reputation and, hence, impact. A lower-visibility product can also have a higher impact than a higher-visibility product (see Cases C and D). Because empirical data are lacking, it is unclear at this point whether measures of visibility and reputation are related. A product must first be available and visible to gain attention and be valued by peers or the public. However, low-quality but highly visible work will not have high impact as described here. The point is that visibility is not an end in itself (see Franceschet 2010; Dewett and Denisi 2004). Mentions and links from others are better measures because they signify high quality and impact.
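Cases A and B can be worked through directly. The sketch below rests on several assumptions:

- Visibility is the number of pages mentioning a product.
- Reputation is the total number of backlinks to those pages.
- Case A's single linked page has exactly one backlink, and Case B's nine backlinks are spread evenly. Only the totals matter here.
- "Impact" is read as average reputation per product, which is only one possible interpretation.

`Footprint` and `impact` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Footprint:
    """Backlink counts for each web page that mentions one academic product."""
    name: str
    backlinks_per_page: list[int]

    @property
    def visibility(self) -> int:
        # Number of pages mentioning the product.
        return len(self.backlinks_per_page)

    @property
    def reputation(self) -> int:
        # Total backlinks pointing at those pages (an assumed operationalization).
        return sum(self.backlinks_per_page)

def impact(products):
    """Average reputation per product (one possible reading of 'impact')."""
    return sum(p.reputation for p in products) / len(products)

# Assumed distributions: Case A has one backlink in total, Case B has nine.
case_a = Footprint("Case A", [1, 0, 0])
case_b = Footprint("Case B", [3, 3, 3])

for case in (case_a, case_b):
    print(case.name, "visibility:", case.visibility,
          "reputation:", case.reputation, "impact:", impact([case]))
```

Both cases report a visibility of 3. Only the backlink totals separate them, which is the distinction the two cases are meant to show.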

The key points thus far are that (a) the web contains far more types of academic output than traditional citation databases; (b) urban planning and other social sciences should recognize the value of gray literature (discussed in an earlier post) to their disciplines and, through webometrics, account for it in faculty evaluation; and (c) web presence and visibility can be expected to grow in importance as academic programs compete for increasingly scarce resources. Academics should recognize the advantages of web visibility. They should use it both to broaden their scholarly communication and to assess scholarly impact.

References

Adie, E. 2009. Commenting on scientific articles (PLoS edition). Nascent. http://blogs.nature.com/wp/nascent/2009/02/commenting_on_scientific_artic.html.

Adkins, D., and J. Budd. 2006. “Scholarly productivity of US LIS faculty.” Library & Information Science Research 28 (3): 374-389.

Adler, N. J., and A. W. Harzing. 2009. “When knowledge wins: Transcending the sense and nonsense of academic rankings.” Academy of Management Learning & Education 8 (1): 72-95.

Anderson, K. 2009. The Impact Factor: A Tool from a Bygone Era? The Scholarly Kitchen. http://scholarlykitchen.sspnet.org/2009/06/29/is-the-impact-factor-from-a-bygone-era/.

Baird, L. L. 1986. “What characterizes a productive research department?” Research in Higher Education 25 (3): 211-225.

Beel, J., B. Gipp, and E. Wilde. 2010. “Academic search engine optimization (ASEO).” Journal of Scholarly Publishing 41 (2): 176-190.

Davis, E. B., and J. T. Rose. 2011. “Converting Faculty Performance Evaluations Into Merit Raises: A Spreadsheet Model.” Journal of College Teaching & Learning (TLC) 1 (2).

Dewett, T., and A. S. Denisi. 2004. “Exploring scholarly reputation: It’s more than just productivity.” Scientometrics 60 (2): 249-272.

Falagas, M. E., E. I. Pitsouni, G. A. Malietzis, and G. Pappas. 2008. “Comparison of PubMed, Scopus, Web of Science, and Google Scholar: strengths and weaknesses.” The FASEB Journal 22 (2): 338-342.

Franceschet, M. 2010. “The difference between popularity and prestige in the sciences and in the social sciences: A bibliometric analysis.” Journal of Informetrics 4 (1): 55-63.

Heck, T., and I. Peters. 2010. Expert recommender systems: Establishing Communities of Practice based on social bookmarking systems. In Proceedings of I-Know, 458–464.

Hsu, C. L., and J. C. C. Lin. 2008. “Acceptance of blog usage: The roles of technology acceptance, social influence and knowledge sharing motivation.” Information & Management 45 (1): 65-74.

Knowlton, A. 2011. Internet Usage Data. Communication Studies Theses, Dissertations, and Student Research. http://digitalcommons.unl.edu/commstuddiss/17.

Kousha, K. 2005. “Webometrics and Scholarly Communication: An Overview.” Quarterly Journal of the National Library of Iran [online] 14 (4).

Kousha, K., M. Thelwall, and S. Rezaie. 2010. “Using the web for research evaluation: The Integrated Online Impact indicator.” Journal of Informetrics 4 (1): 124-135.

Leahey, E. 2007. “Not by productivity alone: How visibility and specialization contribute to academic earnings.” American Sociological Review 72 (4): 533-561.

Massy, W. F. 2010. “Creative Paths to Boosting Academic Productivity.”

Mezrich, R., and P. G. Nagy. 2007. “The academic RVU: a system for measuring academic productivity.” Journal of the American College of Radiology 4 (7): 471-478.

Musick, Marc A. 2011. An Analysis of Faculty Instructional and Grant-based Productivity at The University of Texas at Austin. Austin, TX. http://www.utexas.edu/news/attach/2011/campus/32385_faculty_productivity.pdf.

Neuhaus, C., and H. D. Daniel. 2008. “Data sources for performing citation analysis: an overview.” Journal of Documentation 64 (2): 193-210.

Neylon, C., and S. Wu. 2009. “Article-level metrics and the evolution of scientific impact.” PLoS Biology 7 (11): e1000242.

O’Donnell, R. 2011. Higher Education’s Faculty Productivity Gap: The Cost to Students, Parents & Taxpayers. Austin, TX.

Priem, J., and K. L. Costello. 2010. “How and why scholars cite on Twitter.” Proceedings of the American Society for Information Science and Technology 47 (1): 1-4.

Priem, J., and B. H. Hemminger. 2010. “Scientometrics 2.0: New metrics of scholarly impact on the social Web.” First Monday 15 (7).

Priem, J., D. Taraborelli, P. Groth, and C. Neylon. 2010. alt-metrics: A manifesto. Web. http://altmetrics.org/manifesto.

Roman, D. 2011. “Scholarly publishing model needs an update.” Communications of the ACM 54 (1): 16. http://dl.acm.org/ft_gateway.cfm?id=1866744&type=html.

Taraborelli, D. 2008. Soft peer review: Social software and distributed scientific evaluation. In Proceedings of the 8th International Conference on the Design of Cooperative Systems COOP 08. Institut d’Etudes Politiques d’Aix-en-Provence.

Townsend, B. K., and V. J. Rosser. 2007. “Workload issues and measures of faculty productivity.” Thought & Action 23: 7-19.

Webber, K. L. 2011. “Measuring Faculty Productivity.” University Rankings: 105-121.

Youn, T. I. K., and T. M. Price. 2009. “Learning from the experience of others: The evolution of faculty tenure and promotion rules in comprehensive institutions.” The Journal of Higher Education 80 (2): 204-237.

Academic Visibility

Wednesday, November 13, 2013

Monday, October 14, 2013

Webometrics and Research Impact Analysis

The field of webometrics grew out of citation analysis, bibliometrics, and scientometrics (also referred to as cybermetrics and informetrics). Webometrics is “the study of web based content with primarily quantitative methods for social science research goals using techniques that are not specific to one field of study” (Thelwall 2009, 6). Drawing on Garfield’s (1972) early citation analysis work on journal evaluation, Almind and Ingwersen (1997) are credited with coining the term “webometrics.” They also illustrated how scholarly web artifacts can be assessed in terms of visibility and their relationships to each other, much as in traditional citation analysis (Thelwall 2009; Björneborn and Ingwersen 2001; Björneborn and Ingwersen 2004). This literature includes extensive discussion of web impact analysis, search engine optimization, link analysis, and tools like SocSciBot, web crawlers, LexiURL Searcher (now Thelwall’s Webometric Analyst), web traffic rankings, page ranking, and citation networks. The web has become a global publishing platform with sophisticated indexing and citation analysis capabilities (Jalal, Biswas, and Mukhopadhyay 2009; Kousha and Thelwall 2009).

The interconnectedness of web information is especially suited to scholarly communication. Web mentions (i.e., citations), types of links between web pages (relationships), and the resulting network dynamics produce quantifiable metrics for scholarly impact, usage, and lineage (Kousha 2005; Thelwall 2009; Bollen, Rodriguez, and Van de Sompel 2007). Webometrics can analyze material posted to the web and the network structure of references to academic work. It does so not only by counting citations but also by ranking or scoring these mentions according to the weight or popularity of the referring hyperlinks. The resulting metrics (linkages, citations, mentions, usage, etc.) are analogous to the reputation systems derived from traditional citation analysis. This approach has since been operationalized for citation analysis in web-based tools such as Harzing’s “Publish or Perish” and Indiana University’s “Scholarometer.” These tools have leveraged the power and accessibility of Google Scholar (GS) to exceed that of proprietary indices like ISI Web of Science and Scopus (see Hoang, Kaur, and Menczer 2010; Harzing and van der Wal 2009; Moed 2009; Falagas, Pitsouni, Malietzis, and Pappas 2008; Neuhaus and Daniel 2008; and MacRoberts and MacRoberts 2010).

Nearly all of the literature on webometrics related to scholarly evaluation replicates traditional citation analysis. Much of this research explores whether open-access indices, especially GS, can produce citation metrics similar to those of the ISI and Scopus citation indices (see Harzing and van der Wal 2009; Kousha and Thelwall 2009; Meho and Sugimoto 2009). When GS was launched in 2004, it did not have the coverage of ISI or Scopus. That has since changed. GS, the most “democratic” of the three, has been shown to produce results comparable to the other two for many disciplines (Harzing 2010).

Some of the initial applications of webometrics focused on assessing hyperlinks to estimate “web impact factors” for scientific research web sites and for universities as a whole (Mukhopadhyay 2004). Analyzing both outlinks and inlinks (i.e., backlinks and co-linking) reveals the volume, reach, and hierarchy of web sites. The structure of the Domain Name System (DNS) can in turn identify the country, organization type, and page context of these links (see Thelwall 2004 for further discussion). This structure includes the top-level domain, sub-level domains, and host (or site) level domain. This information can be extracted to derive the network relationships among web sites, much like a social network. The same network approach can also be applied to scholarly artifacts that appear or are referenced on the web. Here, indices or search engines navigate databases of link structures, much as Garfield (1955) proposed for citation indexing (Neuhaus and Daniel 2008).
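The DNS-based analysis and the web impact factor described above can be sketched with Python's standard `urllib.parse`. The backlink URLs, the target site `example.edu`, and its page count are hypothetical. Treating every two-letter top-level domain as a country code is a simplification, since some ccTLDs are used generically. The web impact factor shown is one common formulation: distinct external linking pages divided by the number of pages on the target site. It is not necessarily the formulation used by Mukhopadhyay (2004).

```python
from collections import Counter
from urllib.parse import urlparse

def host_of(url):
    """Lower-cased host name of a URL ('' if none)."""
    return (urlparse(url).hostname or "").lower()

def top_level_domain(url):
    """Last label of the host name, e.g. 'uk' for www.planning.example.ac.uk."""
    return host_of(url).rsplit(".", 1)[-1]

def domain_class(tld):
    # Simplification: two-letter TLDs are treated as country codes.
    return "country code" if len(tld) == 2 else "generic"

def external_wif(backlinks, site_host, site_page_count):
    """Distinct external linking pages divided by the number of pages on the site."""
    external = {
        url for url in backlinks
        if host_of(url) != site_host and not host_of(url).endswith("." + site_host)
    }
    return len(external) / site_page_count

# Hypothetical backlinks to a hypothetical department site, example.edu.
backlinks = [
    "http://www.planning.example.ac.uk/readings.html",
    "http://blog.example.com/post",
    "http://blog.example.com/post",        # same linking page seen twice
    "http://city.example.gov/report",
    "http://www.example.edu/dept/news",    # internal link (same site)
]

tld_counts = Counter(top_level_domain(u) for u in backlinks)
class_counts = Counter(domain_class(t) for t in tld_counts.elements())
wif = external_wif(backlinks, "example.edu", site_page_count=2)
print(tld_counts)
print(class_counts)
print(wif)
```

The suffix check uses `"." + site_host` so that an unrelated host such as `notexample.edu` is not mistaken for an internal page.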

Thelwall, Klitkou, Verbeek, Stuart, and Vincent (2010) point out the challenge of including gray literature: “A big disadvantage of link analysis webometrics, in contrast to citation analysis, is that web publishing is heterogeneous, varying from spam to post-prints. As a result, the quality of the indicators produced is typically not high unless irrelevant content is manually filtered out, and the results also tend to reflect a range of phenomena rather than just research impact” (p. 2). Gray literature in particular has no standardized citation-like databases, so there is less control over how artifacts are cited on the web. This issue is likely to be addressed as webometrics becomes more fully utilized.

Citation analysis and bibliometrics focus on references to books, chapters, and journal articles by a small audience of academics. This approach, by contrast, encompasses a greater portion of the scholarly footprint by including some of the gray literature and non-refereed output of faculty members. The metrics to be discussed delineate four dimensions that are implicit in the spirit of citation analysis: productivity, visibility, reputation, and impact. Each has been discussed, directly or indirectly, in the scholarly communication and citation analysis literature, but not explicitly as faculty evaluation criteria. This is primarily because the application of webometrics departs from the control and domains of academic publishing companies, and of academics themselves, as sources of reputational measures. My next blog post will briefly discuss each of these.

References

Almind, T. C., and P. Ingwersen. 1997. “Informetric analyses on the World Wide Web: methodological approaches to ‘Webometrics’.” Journal of Documentation 53 (4): 404-426.

Bollen, J., M. A. Rodriguez, H. Van de Sompel, L. L. Balakireva, and A. Hagberg. 2007. The largest scholarly semantic network... ever. In Proceedings of the 16th international conference on World Wide Web, 1247-1248. ACM.

Björneborn, L., and P. Ingwersen. 2001. “Perspective of webometrics.” Scientometrics 50 (1): 65-82.

Björneborn, L., and P. Ingwersen. 2004. “Toward a basic framework for webometrics.” Journal of the American Society for Information Science and Technology 55 (14): 1216-1227.

Falagas, M. E., E. I. Pitsouni, G. A. Malietzis, and G. Pappas. 2008. “Comparison of PubMed, Scopus, Web of Science, and Google Scholar: strengths and weaknesses.” The FASEB Journal 22 (2): 338-342.

Garfield, E. 1955. “Citation indexes to science: a new dimension in documentation through association of ideas.” Science 122: 108-111.

Garfield, E. 1972. Citation analysis as a tool in journal evaluation. In American Association for the Advancement of Science.

Harzing, A. W. 2010. The Publish or Perish Book. Tarma Software Research.

Harzing, A. W., and R. van der Wal. 2009. “A Google Scholar h‐index for journals: An alternative metric to measure journal impact in economics and business.” Journal of the American Society for Information Science and Technology 60 (1): 41-46.

Hoang, D. T., J. Kaur, and F. Menczer. 2010. “Crowdsourcing scholarly data.”

Jalal, S. K., S. C. Biswas, and P. Mukhopadhyay. 2009. “Bibliometrics to webometrics.” Information Studies 15 (1): 3-20.

Kousha, K. 2005. “Webometrics and Scholarly Communication: An Overview.” Quarterly Journal of the National Library of Iran [online] 14 (4).

Kousha, K., and M. Thelwall. 2009. “Google Book Search: Citation analysis for social science and the humanities.” Journal of the American Society for Information Science and Technology 60 (8): 1537-1549.

MacRoberts, M. H., and B. R. MacRoberts. 2010. “Problems of citation analysis: A study of uncited and seldom‐cited influences.” Journal of the American Society for Information Science and Technology 61 (1): 1-12.

Meho, L. I., and C. R. Sugimoto. 2009. “Assessing the scholarly impact of information studies: A tale of two citation databases—Scopus and Web of Science.” Journal of the American Society for Information Science and Technology 60 (12): 2499-2508.

Moed, H. F. 2009. “New developments in the use of citation analysis in research evaluation.” Archivum Immunologiae et Therapiae Experimentalis 57 (1): 13-18.

Mukhopadhyay, Parthasarathi. 2004. “Measuring Web Impact Factors: A Webometric Study based on the Analysis of Hyperlinks.” Library and Information Science: 1-12.

Neuhaus, C., and H. D Daniel. 2008. “Data sources for performing citation analysis: an overview.” Journal of Documentation 64 (2): 193-210.

Thelwall, M. 2004. Link analysis: An information science approach. Academic Press.

Thelwall, M. 2009. “Introduction to webometrics: Quantitative web research for the social sciences.” Synthesis Lectures on Information Concepts, Retrieval, and Services 1 (1): 1-116.

Thelwall, M., A. Klitkou, A. Verbeek, D. Stuart, and C. Vincent. 2010. “Policy‐relevant Webometrics for individual scientific fields.” Journal of the American Society for Information Science and Technology 61 (7): 1464-1475.

Wednesday, September 11, 2013

The Importance of Gray Literature

Standard citation analysis metrics (number of citations, h-index, g-index, etc.) are confined to the domains of Web of Knowledge (WoK), Scopus, and Google Scholar (GS). Academics in urban planning should also look to the web (i.e., webometrics, discussed later) to gauge the visibility of their books, book chapters, and journal articles, as well as the academic gray literature that is produced and consumed by planning academics and planning practitioners. This gray literature covers the rest of the academic footprint, such as research reports, conference presentations, conference proceedings, and funded research grant materials. Course syllabi are an additional source; they are available on the web and often cite academic work and other gray literature on planning topics. Other examples of gray literature for planning academics include studio or workshop projects that are posted to the web and often take the form of professional consulting reports.

It is very likely that blog posts and mentions will become recognized gray literature and may eventually be accepted as academic products to be evaluated along with other scholarly artifacts. In their discussion of blogging by untenured professors, Hurt and Yin (2006) suggest that blogging represents a form of “pre-scholarship” whose contents may be the kernels of future articles (Hurt and Yin 2006, 15). Some planning academics already list contributions to sites such as Planetizen.com on their curriculum vitae (CV) under the heading “other publications.” The legitimacy of these postings is evidenced by the citations, downloads, and forum discussion they attract from a mix of planning academics and professionals. It is likely that many of the 50% of planning academics whom Stiftel, Rukmana, and Alam (2004) identify as non-publishing are producing worthy gray literature that is valuable to planning pedagogy but goes unnoticed by traditional citation analysis and bibliometrics.

References

Hurt, C., and T. Yin. 2006. “Blogging While Untenured and Other Extreme Sports.” Washington University Law Review 84: 1235.

Stiftel, B., D. Rukmana, and B. Alam. 2004. “A National Research Council-Style Study.” Journal of Planning Education and Research 24 (1): 6-22.

Next entry: Webometrics

Sunday, September 1, 2013

Academic Visibility for Urban Planning

The role of the web in academic research cannot be overstated. As both a source and a destination for scholarship, cyberspace acts as a market for consumers of research, especially for disciplines with popular appeal and applicability. Urban planning is an excellent example of such a discipline. With its professional orientation toward the well-being of neighborhoods, cities, regions, and nations, planning scholarship is shared among academics and has practical implications that are debated and ultimately implemented (or not) by the public (Stiftel and Mogg 2007). A public well informed about community and regional policies and planning activities is a desirable end in itself, and one that is international in scale (Stiftel and Mukhopadhyay 2007). In addition, faculty should be greatly concerned with justifying the tax dollars spent to support scholarly activity. Cyberspace is, and will increasingly be, the means by which planning academics promote their contributions to the profession.

A primary activity of academics is discovery through research. Discovery occurs as new thoughts, ideas, or perspectives develop through the research process. These first take place in the mind but must be expressed in a tangible way to be useful to others. Marchionini (2010) describes this process as converting the mental to the physical in the form of usable information. Among social scientists, the physical expression of these “artifacts” commonly takes the form of books, chapters, journal articles, and other types of published reports and documents. More recently, these artifacts have also taken electronic form as blog entries, online articles, electronic multimedia, and other web-based products. As Stiftel and Mogg (2007) argued, the electronic realm has revolutionized scholarly communication for planning academics.

In addition to being a source of research information and a means of dissemination, the web also serves as a vehicle for scholarly evaluation. Traditional quantitative measures of academic output have been used to assess performance, especially for promotion and tenure. The “publish or perish” message within academia stresses the importance of scholarship during the review process. Productivity is a critical factor when arguing for scarce resources, comparing academic programs, and competing in global education and research markets (Goldstein and Maier 2010; Arimoto 2011; Linton, Tierney, and Walsh 2011). Productivity measures are frequently debated and have been used to analyze salary differences between men and women and across disciplines and specialties. Perspectives on productivity are changing rapidly as electronic research formats and modes of dissemination proliferate. The web has created opportunities for extending the reach of academic communication while also presenting challenges for assessing quality and value.

The traditional means of assessing academic productivity and reputation has been citation analysis. The literature on citation analysis for scholarly evaluation is very extensive; it weighs the appropriateness of citation measures within and across disciplines and offers nuanced discussion of a range of metrics (see for example Garfield 1972; Garfield and Merton 1979; MacRoberts and MacRoberts 1989, 1996; Adam 2002; Moed 2005). Recently, Google Scholar (GS) has begun reporting popular metrics like the h-index, and tools that draw on GS data compute related measures such as the g-index and e-index, making available web-based citation analysis that was previously limited to proprietary citation indexes like ISI and Scopus. This is the likely trajectory of citation analysis as open access scholarship becomes more pervasive. There is some debate, however, because GS's inclusion of gray literature citations means that its analyses draw on a different universe of publications when assessing citation frequency and lineage. This article does not dwell on traditional citation analysis techniques because it proposes an expanded approach that moves beyond the bounds of citation indices to assess overall academic visibility and impact on the web. In short, traditional citation analysis has focused on approximately one-third of faculty activity (research) to assess academic productivity and value (albeit an important one-third), ignoring teaching and outreach/service activities, which are also important expressions of scholarly activity.
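For readers less familiar with these indices, here is a minimal Python sketch that computes the h-index, g-index, and e-index from a list of per-publication citation counts. It is an illustration under stated assumptions, not how GS or any citation database implements them: it assumes the counts have already been collected (the list in the usage lines is hypothetical) and uses the commonly cited definitions. The h-index is the largest h such that h publications each have at least h citations; the g-index is the largest g such that the top g publications together have at least g² citations (capped here at the number of publications); and the e-index is the square root of the citations in the h-core beyond the h² already "counted" by the h-index.

```python
import math


def h_index(citations):
    """Largest h such that h publications each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # counts are descending, so no later rank can qualify
    return h


def g_index(citations):
    """Largest g such that the top g publications have at least g**2 citations in total.

    Assumption: g is capped at the number of publications (no zero-padding).
    """
    ranked = sorted(citations, reverse=True)
    g = 0
    total = 0
    for rank, cites in enumerate(ranked, start=1):
        total += cites
        if total >= rank * rank:
            g = rank
    return g


def e_index(citations):
    """Square root of the 'excess' citations in the h-core beyond h**2."""
    ranked = sorted(citations, reverse=True)
    h = h_index(ranked)
    excess = sum(ranked[:h]) - h * h
    return math.sqrt(excess)


papers = [25, 8, 5, 3, 3, 1, 0]  # hypothetical citation counts for one author
print(h_index(papers), g_index(papers), round(e_index(papers), 2))  # → 3 6 5.39
```

With these hypothetical counts the h-index is only 3, while the g-index (6) rewards the heavily cited first paper and the e-index summarizes the 29 excess citations in the h-core. Because databases index different universes of publications, the same author can score differently in WoK, Scopus, and GS.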

Beyond serving as a repository for artifacts of scholarly information and a forum for discussion, the web also provides a market for ideas, with a system of feedback about the relevance, reliability, and value of the information posted there. Demand or value is expressed through user behavior that generates “reputation,” much as eBay customers rate sellers and buyers, and as “Likes” on Facebook, social bookmarks, consumer comments, product-reliability ratings, and page-ranking methods like that of Brin and Page (1998) signal value. These can function as built-in mechanisms to evaluate many types of academic research. Planning is very well suited to this model because demand for its academic products extends beyond the research circles of the discipline to the public, who are frequently involved in urban planning processes.
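Brin and Page's PageRank is a well-known example of such a built-in reputation mechanism: a page scores highly when other highly scored pages link to it. The following is a toy power-iteration sketch in Python, assuming a small hypothetical link graph of planning web pages (the page names are invented for illustration) and the damping factor of 0.85 suggested in the original paper. It is not how any search engine computes rankings today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Toy power-iteration PageRank.

    links maps each page to the list of pages it links to.
    Returns a dict of scores that sum to 1.
    """
    # Collect every page, including pages that only appear as link targets.
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    if not pages:
        return {}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives the "random jump" share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page (no outgoing links): spread its rank over all pages.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical graph: a syllabus, a blog post, and a studio report all link to one article.
web = {
    "syllabus": ["article"],
    "blog_post": ["article", "studio_report"],
    "studio_report": ["article"],
    "article": [],
}
scores = pagerank(web)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

The article ends up with the highest score because every other page points to it, which mirrors the idea that links from syllabi, blogs, and reports can signal the value of a piece of planning scholarship.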

References

Adam, D. 2002. “Citation analysis: The counting house.” Nature 415 (6873): 726-729.

Arimoto, Akira. 2011. Reaction to Academic Ranking: Knowledge Production, Faculty Productivity from an International Perspective. In University Rankings: The Changing Academy – The Changing Academic Profession in International Comparative Perspective, ed. Jung Cheol Shin, Robert K. Toutkoushian, and Ulrich Teichler, 3:229-258. Springer Netherlands. http://dx.doi.org/10.1007/978-94-007-1116-7_12.

Brin, S., and L. Page. 1998. “The anatomy of a large-scale hypertextual Web search engine.” Computer networks and ISDN systems 30 (1-7): 107-117.

Garfield, E. 1972. “Citation analysis as a tool in journal evaluation.” Science 178 (4060): 471-479.

Garfield, E., and R. K. Merton. 1979. Citation indexing: Its theory and application in science, technology, and humanities. Vol. 8. New York: Wiley.

Goldstein, H., and G. Maier. 2010. “The use and valuation of journals in planning scholarship: Peer assessment versus impact factors.” Journal of Planning Education and Research 30 (1): 66.

Linton, J. D., R. Tierney, and S. T. Walsh. 2011. “Publish or Perish: How Are Research and Reputation Related?” Serials Review.

MacRoberts, M. H., and B. R. MacRoberts. 1989. “Problems of citation analysis: A critical review.” Journal of the American Society for Information Science 40 (5): 342-349.

———. 1996. “Problems of citation analysis.” Scientometrics 36 (3): 435-444.

Marchionini, G. 2010. “Information Concepts: From Books to Cyberspace Identities.” Synthesis Lectures on Information Concepts, Retrieval, and Services 2 (1): 1-105.

Moed, H. F. 2005. Citation analysis in research evaluation. Vol. 9. Kluwer Academic Pub.

Stiftel, B., and R. Mogg. 2007. “A planner’s guide to the digital bibliographic revolution.” Journal of the American Planning Association 73 (1): 68-85.

Stiftel, B., and C. Mukhopadhyay. 2007. “Thoughts on Anglo-American hegemony in planning scholarship: Do we read each other’s work?” Town Planning Review 78 (5): 545-572.

Next installment: "Visibility of Planning Gray Literature"

Tuesday, August 20, 2013

Share your presentations!

There are several places to share your presentation slides. Popular sites include Slideshare, Authorstream, Slideshow, Scribd, and many more (see Mashable and Hellobloggerz for more complete listings). But why should academics share their slides? One reason is that a lot of time goes into preparing slides for conference presentations, research talks, and classes, and slides tend to distill a great deal of in-depth information and analysis from current research. Presentations often summarize research papers and reports that are ultimately published in both digital and hard-copy formats. Presentations are also a form of scholarly communication and, like other scholarly products, should be shared.

Sharing presentations is yet another way to make your work visible online. Just as academics and research professionals search for scholarship in the form of books, chapters, articles, and white papers, they also look for presentation materials to reference and adapt. Citation practices for presentation slides obviously differ from those for traditional scholarship, but ideally those who come across your slides will cite them properly.

A very good example is Laura Czerniewicz's presentation on Slideshare, which provides some excellent information about online visibility in slide form. Presentations like this can be more condensed and helpful than the same information in article form. Good presentations are hard to create, but done well, they can promote your interests and research in an accessible and interesting way. In her case, some of the infographics are intriguing and provide useful summary statistics and data sources.

Other nice examples are John Tennant's Prezi presentations on "Social media for academics" and "Blogging for academics." Posting presentations online also allows easy sharing through social media channels like Facebook, Twitter, LinkedIn, and email.

Most sites let viewers leave comments, likes, and favorites, which are good metrics to track along with the number of views and downloads.

University libraries are great resources; one example is the open.michigan site at the University of Michigan. As they say, "Share with the World." The site links to Slideshare and Scribd as recommended places to post presentations. University libraries are experts in how (and where) to share scholarship, as many already demonstrate through open access portals, open access publishing, and scholarly repositories.

Posting your presentations makes you and your work more visible online and helps to increase your scholarly impact.

Saturday, July 20, 2013

Twitter for Academics

While Twitter usage remains low among academics, microblogging is likely to increase over time. Those using it are finding particular benefits, especially when it is combined with other social media channels. The 140-character limit means that Twitter is best used to announce publications, events, and new research; beyond that, a tweet can offer little more than links to blogs, websites, or documents posted to the web. Used strategically, however, this increases the visibility of your work. The following briefly describes how academics can use Twitter for scholarly communication, drawing on some existing web resources.

The blog, Savage Minds Backup, mentions a few specific benefits of Twitter such as:

- Announcing new research, publications, conferences, and discussion

- Community building

- Access to archived conversations

- Personal curation of scholarly materials

- Rapid dissemination

If you are an existing user of Twitter, you will recognize that these benefits take time and are a function of building a group of followers interested in your content. You’ll likely find many Twitter users with similar research interests who will not only be interested in what you are posting, but also help to build your base of followers by retweeting and favoriting what you have to say.

A few LSE reports provide some excellent information on using social media such as Twitter. One of them offers six helpful suggestions for improving the effectiveness of your tweets, which also demonstrate how Twitter works in combination with other types of communication and social media:

- Follow others with similar interests

- Promote your Twitter profile on your email signature, business card, blog, presentations, etc.

- For research projects, tweet about progress and not just the completed project

- Point to other materials like presentations at Scribd, Authorstream, Slideshare, etc.

- Use it with blogging to promote blogs and allow others to share your content

- Use it for classes to communicate with students for updates, announcements, etc.

Another resource is Kathrine Linzy’s “Twitter for Academics.” In addition to some of the concepts already mentioned, she offers the following:

- Use Twitter to publicize your work

- Point to other resources (links, tweets, documents, etc.)

- Consider your on-line personality/identity

Your overall process and online strategy help to develop your professional identity, so you should consider whether your audience will be academics in your discipline or a broader public. Many academics choose to keep personal and professional accounts separate, depending on the nature of the content and whether it is being used to enhance their professional identity and academic visibility.

Other scholarly communication tools such as Mendeley, Google Scholar Citations, CiteULike, ResearchGate, Academia.edu, LinkedIn, or Facebook are useful in conjunction with Twitter. Several of these can store and track your citations, which makes them good ways to share your scholarship. Tracking activity through these platforms is the basis of “altmetrics,” which will be discussed in a later post.

Note: See also How to Use Twitter for Business (HubSpot). It is directed at businesses, but the same concepts apply to scholarly communication.

Thursday, November 29, 2012

The (Coming) Social Media Revolution in the Academy

A revolution in academia is coming. New social media and other web technologies are transforming the way we, as academics, do our jobs. These technologies offer communication that is interactive, instantaneous, global, low-cost, and fully searchable, as well as platforms for connecting with other scholars everywhere.
